We motivate Energy-Based Models (EBMs) as a promising model class for continual learning problems. Instead of tackling continual learning with external memory, growing models, or regularization, EBMs change the underlying training objective so that it causes less interference with previously learned information. Our proposed version of EBMs for continual learning is simple and efficient, and it outperforms baseline methods by a large margin on several benchmarks. Moreover, our proposed contrastive-divergence-based training objective can be applied to other continual learning methods, substantially boosting their performance. We further show that EBMs adapt to a more general continual learning setting in which the data distribution changes without explicitly delineated tasks. These observations point towards EBMs as a useful building block for future continual learning methods.
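To make the idea of a training objective that "causes less interference" more concrete, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes a hypothetical energy E(x, y) = -<f(x), g(y)> between an input feature and a label embedding, and a contrastive loss that lowers the energy of the true label while raising the energy only of labels present in the current batch. Under this assumption, the energies of classes from earlier tasks never enter the loss, so they are not pushed up.

```python
import numpy as np

def energies(features, label_embeddings):
    """Hypothetical energy E(x, y) = -<f(x), g(y)> for every (example, label) pair.

    features: (batch, d), label_embeddings: (num_classes, d) -> (batch, num_classes).
    """
    return -features @ label_embeddings.T

def contrastive_loss(E, labels):
    """Assumed contrastive objective: E(x, y+) + log sum_{y in batch} exp(-E(x, y)).

    Only labels that appear in the current batch act as negatives, so energies
    of classes outside the batch (e.g. earlier tasks) do not affect the loss.
    """
    batch_classes = np.unique(labels)              # sorted labels seen in this batch
    E_batch = E[:, batch_classes]                  # restrict energies to those labels
    pos = np.searchsorted(batch_classes, labels)   # column index of each true label
    neg = -E_batch
    m = neg.max(axis=1, keepdims=True)             # stabilize the log-sum-exp
    log_z = (m + np.log(np.exp(neg - m).sum(axis=1, keepdims=True))).squeeze(1)
    return float(np.mean(E_batch[np.arange(len(labels)), pos] + log_z))
```

Changing the energy of a class that is absent from the batch leaves the loss unchanged. A standard softmax cross-entropy over all classes would instead push down the scores of every class from earlier tasks.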

Energy landmap

Energy landmaps of Softmax-based classifiers and EBMs after training on tasks T9 and T10 on permuted MNIST. The darker the diagonal, the better the model is at preventing forgetting of previous tasks.

Predicted label distribution

Predicted label distribution after learning each task on the split MNIST dataset. The Softmax-based classifier only predicts classes from the current task, while our EBM predicts labels across all classes seen so far.
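The class-incremental prediction rule behind this behavior can be sketched as follows. The sketch assumes the model's energies for a batch are stored in an array `E` of shape `(batch, num_classes)` and that lower energy means a better match. The helper `predict_seen_classes` is hypothetical and is not taken from the authors' code. The prediction is the lowest-energy label among all classes seen so far, not only those of the current task.

```python
import numpy as np

def predict_seen_classes(E, seen_classes):
    """Return, for each example, the seen class with the lowest energy.

    E: (batch, num_classes) energy matrix (assumed: lower energy = better fit).
    seen_classes: labels encountered in any task so far; unseen labels are ignored.
    """
    seen = np.asarray(seen_classes)
    return seen[np.argmin(E[:, seen], axis=1)]
```

With `E = [[3.0, 0.5, 1.0, 9.0], [0.2, 4.0, 1.0, -5.0]]` and seen classes `[0, 1, 2]`, the predictions are `[1, 0]`. The very low energy of the not-yet-seen class 3 is ignored.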

Confusion matrices

Confusion matrices between ground truth labels and predicted labels at the end of learning on split MNIST (left) and permuted MNIST (right). The lighter the diagonal is, the more accurate the predictions are.
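The following minimal sketch shows how a confusion matrix like the ones above can be computed from ground-truth and predicted labels. It uses rows for true labels and columns for predictions, which is an assumed convention. Diagonal entries count correct predictions.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Count (true label, predicted label) pairs; rows = truth, columns = prediction."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # unbuffered increment
    return cm
```

Overall accuracy is `cm.trace() / cm.sum()`. A model that forgets earlier tasks concentrates its mass in the columns of the most recent classes instead of on the diagonal.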

Test accuracy during training

Class-IL testing accuracy of the standard classifier (SBC), classifier using our training objective (SBC*), and EBMs on each task on the split MNIST dataset (left) and permuted MNIST dataset (right).

Boundary-aware setting

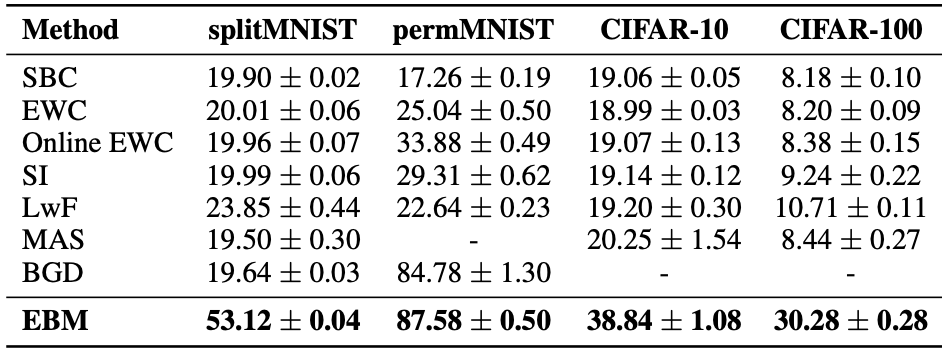

Evaluation of class-incremental learning in the boundary-aware setting. Test accuracy on four datasets is reported. Each experiment is performed at least 10 times with different random seeds, and results are reported as the mean/SEM over these runs. Note that our comparison is restricted to methods that do not replay stored or generated data.
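For reference, here is a minimal sketch of the mean/SEM summary over seeds. It assumes SEM is the sample standard deviation (ddof=1) divided by the square root of the number of runs; the exact estimator used for the tables is not stated. The accuracies in the usage line are illustrative only and are not results from the paper.

```python
import numpy as np

def mean_sem(accuracies):
    """Mean and standard error of the mean over runs (assumes sample std, ddof=1)."""
    acc = np.asarray(accuracies, dtype=float)
    return acc.mean(), acc.std(ddof=1) / np.sqrt(acc.size)
```

For example, `mean_sem([0.90, 0.92, 0.94])` gives a mean of about 0.92 and an SEM of about 0.0115.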

Boundary-agnostic setting

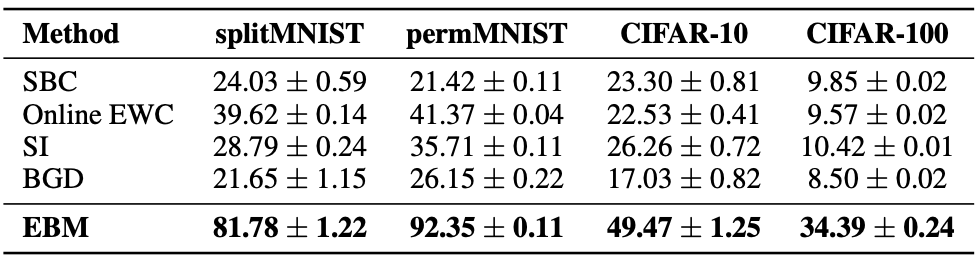

Evaluation of class-incremental learning performance in the boundary-agnostic setting. Each experiment is performed 5 times with different random seeds, and average test accuracy is reported as the mean/SEM over these runs. Note that our comparison is restricted to methods that do not replay stored or generated data.

Related Projects

Check out our related projects on utilizing energy-based models!