We introduce iterative reasoning through energy diffusion (IRED), a novel framework for learning to reason across a variety of tasks by formulating reasoning and decision-making problems as energy-based optimization. IRED learns energy functions to represent the constraints between input conditions and desired outputs. After training, IRED adapts the number of optimization steps during inference based on problem difficulty, enabling it to solve problems outside its training distribution, such as more complex Sudoku puzzles, matrix completion with large value magnitudes, and path finding in larger graphs. Key to our method's success are two novel techniques: learning a sequence of annealed energy landscapes for easier inference, and combining score-function and energy-landscape supervision for faster and more stable training. Our experiments show that IRED outperforms existing methods in continuous-space reasoning, discrete-space reasoning, and planning tasks, particularly in more challenging scenarios.
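
The training recipe above combines score-function supervision with energy-landscape supervision. The sketch below shows one way such a combined objective could be computed, assuming a variance-preserving corruption `sqrt(1 - sigma^2) * y + sigma * eps`, a denoising term that asks the energy gradient to match the injected noise, and a two-sample contrastive term that ranks a corrupted correct answer below a corrupted perturbed one. The toy energy `0.5 * w * ||y - c||^2` and every function name here are hypothetical stand-ins for a learned network; this is an illustration, not the authors' implementation.

```python
import math
import random


def energy(w, c, y):
    # Hypothetical toy energy E(y) = 0.5 * w * ||y - c||^2 standing in for a
    # learned network E_theta(x, y, k); w and c play the role of parameters.
    return 0.5 * w * sum((yi - ci) ** 2 for yi, ci in zip(y, c))


def grad_energy(w, c, y):
    # Analytic gradient dE/dy of the toy energy.
    return [w * (yi - ci) for yi, ci in zip(y, c)]


def corrupt(y, eps, sigma):
    # Variance-preserving corruption sqrt(1 - sigma^2) * y + sigma * eps (assumed).
    a = math.sqrt(1.0 - sigma ** 2)
    return [a * yi + sigma * ei for yi, ei in zip(y, eps)]


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def combined_loss(w, c, y_star, sigma, eps, delta, contrast_weight=1.0):
    """Score (denoising) supervision plus energy (contrastive) supervision."""
    y_pos = corrupt(y_star, eps, sigma)
    # Score term: the energy gradient at the noisy sample should match the noise.
    g = grad_energy(w, c, y_pos)
    score_term = sum((gi - ei) ** 2 for gi, ei in zip(g, eps)) / len(eps)
    # Energy term: a corrupted *wrong* answer (y_star + delta) should receive
    # higher energy than the corrupted true answer; this is -log softmax over
    # the pair, i.e. softplus(E_pos - E_neg).
    y_neg = corrupt([yi + di for yi, di in zip(y_star, delta)], eps, sigma)
    contrast_term = softplus(energy(w, c, y_pos) - energy(w, c, y_neg))
    return score_term + contrast_weight * contrast_term, score_term, contrast_term


if __name__ == "__main__":
    rng = random.Random(0)
    sigma = 0.5
    y_star = [1.0, -1.0]
    eps = [rng.gauss(0.0, 1.0) for _ in y_star]
    delta = [rng.gauss(0.0, 2.0) for _ in y_star]
    # Parameters at which the score term is exactly zero for this toy energy.
    w, c = 1.0 / sigma, corrupt(y_star, [0.0, 0.0], sigma)
    print(combined_loss(w, c, y_star, sigma, eps, delta))
```

With `w = 1 / sigma` and `c = sqrt(1 - sigma^2) * y_star`, the toy gradient `w * (y_noisy - c)` equals `eps` exactly, so the score term vanishes. In a real setting, both terms would be minimized over network parameters, separately for each noise level of the annealed sequence.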

Our approach can solve a set of continuous algorithmic tasks such as matrix addition, matrix inverse, and matrix completion. Below, we illustrate the error map of our approach's predictions on the matrix inverse task as we iteratively optimize each energy landscape.

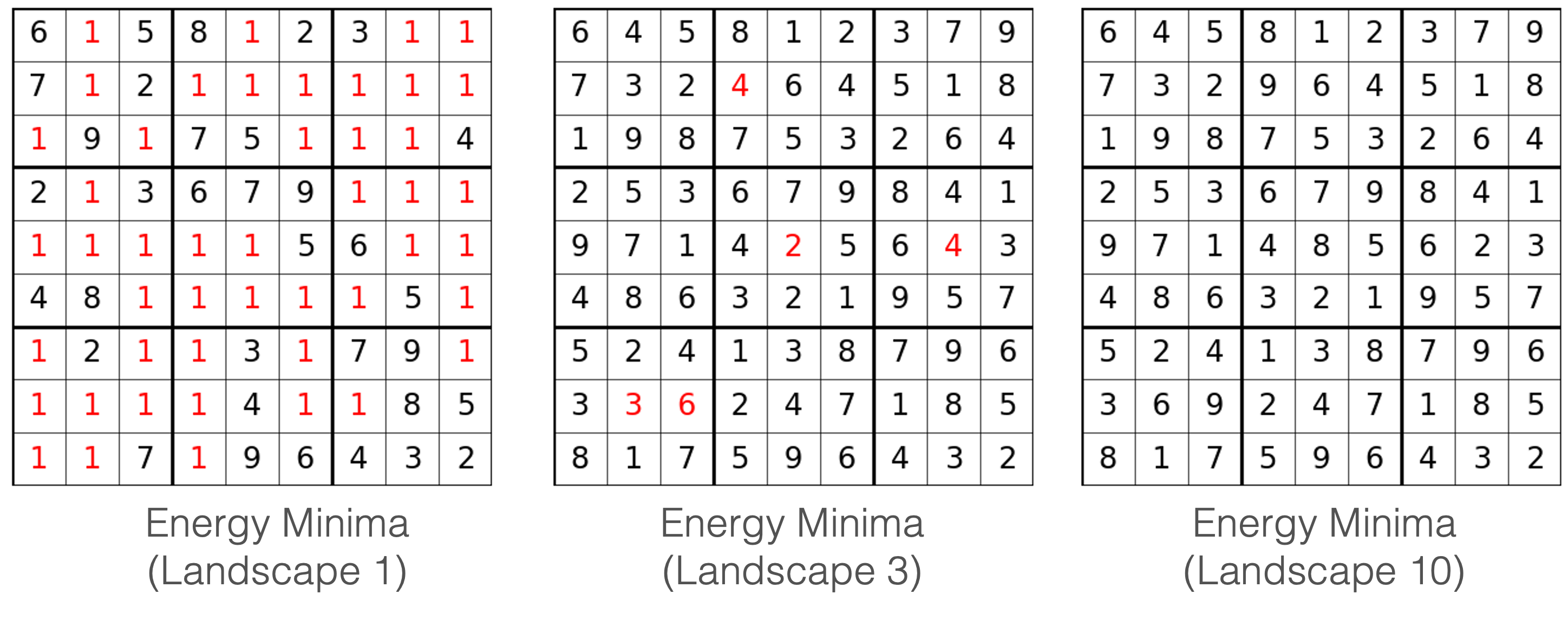
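
To make "iteratively optimize each energy landscape" concrete, here is a minimal sketch that replaces the learned energies with a hand-written one, `E_lam(Y) = ||A Y - I||_F^2 + lam * ||Y||_F^2`, annealed from a smooth, strongly convex landscape (`lam = 1.0`) down to the target landscape (`lam = 0`). The ridge-style schedule, the step size, and all helper names are assumptions chosen for illustration; IRED instead learns its sequence of landscapes from data.

```python
def matmul(a, b):
    # Plain-Python matrix product (lists of rows).
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def residual(a, y):
    # R = A Y - I; the constraint "Y is the inverse of A" holds when R = 0.
    ay = matmul(a, y)
    return [[ay[i][j] - (1.0 if i == j else 0.0) for j in range(len(ay))]
            for i in range(len(ay))]


def grad_energy(a, y, lam):
    # Gradient of E_lam(Y) = ||A Y - I||_F^2 + lam * ||Y||_F^2:
    # dE/dY = 2 A^T (A Y - I) + 2 lam Y
    g = matmul(transpose(a), residual(a, y))
    n = len(y)
    return [[2.0 * g[i][j] + 2.0 * lam * y[i][j] for j in range(n)] for i in range(n)]


def error_map(a, y):
    # Entry-wise |A Y - I| for the current prediction.
    return [[abs(v) for v in row] for row in residual(a, y)]


def annealed_inverse(a, lams=(1.0, 0.1, 0.0), steps=200, lr=0.03):
    """Gradient descent over a sequence of landscapes, smoothest first."""
    n = len(a)
    y = [[0.0] * n for _ in range(n)]
    history = []
    for lam in lams:
        for _ in range(steps):
            g = grad_energy(a, y, lam)
            y = [[y[i][j] - lr * g[i][j] for j in range(n)] for i in range(n)]
        history.append(error_map(a, y))  # one error map per landscape
    return y, history


if __name__ == "__main__":
    inv, history = annealed_inverse([[2.0, 1.0], [1.0, 3.0]])
    for k, emap in enumerate(history):
        print(k, max(v for row in emap for v in row))
```

Each entry of `history` is the error map after one landscape, analogous in spirit to the panels in the figure above. The step size `lr = 0.03` is small enough for gradient descent to be stable on this particular 2x2 matrix; other matrices may need a different value.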

Our approach can also solve discrete reasoning tasks such as Sudoku. Below, we illustrate the predicted solutions to the Sudoku problem as we run optimization over additional energy landscapes.

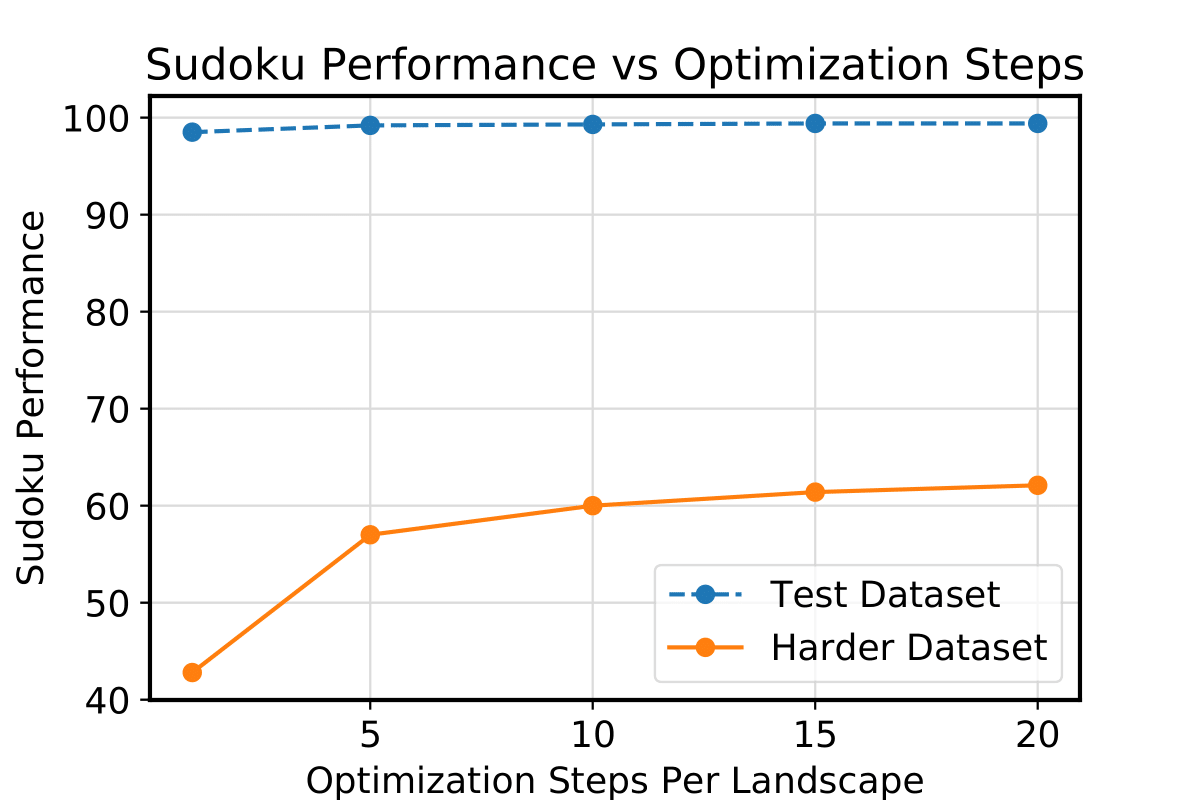
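
For Sudoku, one way to read "energy functions represent the constraints between input conditions and desired outputs" is a hand-written constraint energy over a relaxed one-hot answer. The energy is zero exactly when every row, column, and box contains each digit once, every cell holds one digit, and the given clues are respected. The sketch assumes this relaxed one-hot representation and uses hypothetical names (`sudoku_energy`, `one_hot`, `decode`); IRED learns its energy from data rather than hard-coding the rules.

```python
def sudoku_energy(p, clues):
    """Constraint energy of a relaxed Sudoku answer.

    p[r][c][d] is the weight that cell (r, c) holds digit d + 1;
    clues maps (r, c) -> the given digit.
    """
    e = 0.0
    for d in range(9):
        for i in range(9):
            row = sum(p[i][c][d] for c in range(9))
            col = sum(p[r][i][d] for r in range(9))
            br, bc = 3 * (i // 3), 3 * (i % 3)
            box = sum(p[br + r][bc + c][d] for r in range(3) for c in range(3))
            # Each digit should appear exactly once per row, column, and box.
            e += (row - 1.0) ** 2 + (col - 1.0) ** 2 + (box - 1.0) ** 2
    for r in range(9):
        for c in range(9):
            e += (sum(p[r][c]) - 1.0) ** 2  # each cell holds exactly one digit
    for (r, c), digit in clues.items():
        e += (1.0 - p[r][c][digit - 1]) ** 2  # agree with the given entries
    return e


def one_hot(grid):
    return [[[1.0 if grid[r][c] == d + 1 else 0.0 for d in range(9)]
             for c in range(9)] for r in range(9)]


def decode(p):
    # Round a relaxed answer back to digits by taking the argmax per cell.
    return [[max(range(9), key=lambda d: p[r][c][d]) + 1 for c in range(9)]
            for r in range(9)]


if __name__ == "__main__":
    # A valid grid from the pattern (3r + r // 3 + c) mod 9 + 1.
    solution = [[(3 * r + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]
    clues = {(0, 0): solution[0][0], (4, 4): solution[4][4]}
    broken = [row[:] for row in solution]
    broken[0][0], broken[0][1] = broken[0][1], broken[0][0]
    print(sudoku_energy(one_hot(solution), clues))  # 0.0
    print(sudoku_energy(one_hot(broken), clues))    # 5.0: 2.0 each for columns 0 and 1, 1.0 for clue (0, 0)
```

Swapping two cells in the same row and box leaves the row and box terms at zero, but it creates a duplicate and a missing digit in each of the two affected columns and contradicts a given clue, so the energy rises.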

When generalizing to harder variants of Sudoku (with fewer numerical entries given), we can use additional test-time optimization iterations to obtain a more accurate final answer.

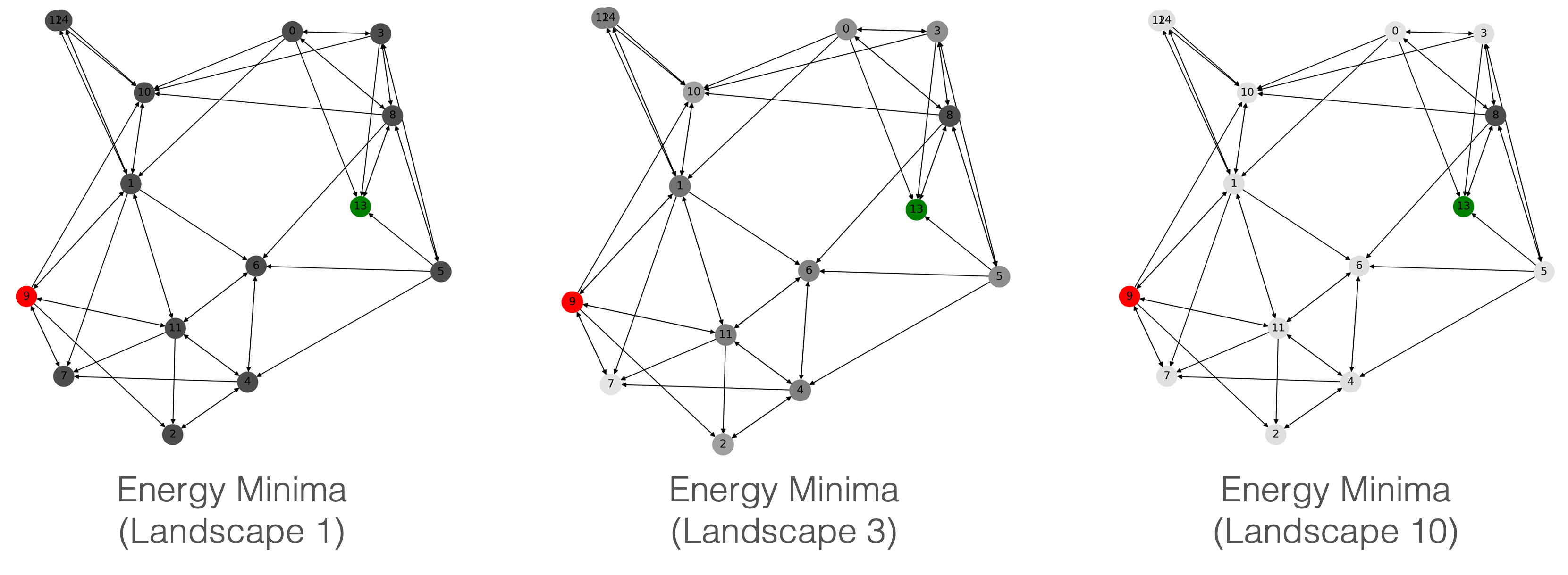
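
A simple way to spend extra test-time iterations only where they are needed is to keep descending until the energy stops improving. The stopping rule below (halt once the per-step energy decrease falls below `tol`) is a hypothetical criterion for illustration, not necessarily the one IRED uses. The quadratic energy stands in for a learned landscape, and a starting point far from the solution plays the role of a harder puzzle.

```python
def descend_until_converged(energy, grad, y, lr=0.1, tol=1e-8, max_steps=10_000):
    """Gradient descent that stops once an iteration lowers the energy by < tol.

    Returns the final point and the number of iterations actually used, so
    instances that start farther from a minimum automatically get more steps.
    """
    e = energy(y)
    for step in range(1, max_steps + 1):
        y = [yi - lr * gi for yi, gi in zip(y, grad(y))]
        e_new = energy(y)
        if e - e_new < tol:
            return y, step
        e = e_new
    return y, max_steps


# Toy stand-in for a learned landscape: E(y) = 0.5 * ||y - TARGET||^2.
TARGET = [1.0, 2.0, 3.0]


def toy_energy(y):
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, TARGET))


def toy_grad(y):
    return [yi - ti for yi, ti in zip(y, TARGET)]


if __name__ == "__main__":
    easy = [t + 0.1 for t in TARGET]    # start close to the solution
    hard = [t + 100.0 for t in TARGET]  # start far away (a "harder" instance)
    print(descend_until_converged(toy_energy, toy_grad, easy)[1])
    print(descend_until_converged(toy_energy, toy_grad, hard)[1])
```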

Finally, our approach can also solve planning problems. Below, we illustrate how IRED finds the correct next action to take in a graph when moving from a start node (green) to a goal node (red).
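
Planning can be phrased the same way: score each candidate next node with an energy and pick the minimizer. In the sketch below the energy is hand-crafted (infinite for non-neighbors, otherwise one plus the BFS distance from the candidate to the goal), whereas IRED would learn it from data. The example graph and all function names are hypothetical.

```python
import math
from collections import deque


def bfs_distances(graph, goal):
    # Hop distance from every reachable node to the goal (undirected graph).
    dist = {goal: 0}
    queue = deque([goal])
    while queue:
        u = queue.popleft()
        for v in graph[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def action_energy(graph, dist, node, action):
    # Hypothetical hand-crafted energy: moving to a non-neighbor is forbidden
    # (infinite energy); otherwise pay one step plus the remaining distance.
    if action not in graph[node]:
        return math.inf
    return 1 + dist.get(action, math.inf)


def next_action(graph, start, goal):
    # The chosen action is the minimizer of the energy over candidate next nodes.
    dist = bfs_distances(graph, goal)
    return min(graph, key=lambda a: action_energy(graph, dist, start, a))


if __name__ == "__main__":
    # Two routes from 0 to 3: 0-1-2-3 (3 hops) and 0-4-5-6-3 (4 hops).
    graph = {0: [1, 4], 1: [0, 2], 2: [1, 3], 3: [2, 6],
             4: [0, 5], 5: [4, 6], 6: [5, 3]}
    print(next_action(graph, 0, 3))  # 1
```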

@InProceedings{Du_2024_ICML,
  author = {Du, Yilun and Mao, Jiayuan and Tenenbaum, Joshua B.},
  title = {Learning Iterative Reasoning through Energy Diffusion},
  booktitle = {International Conference on Machine Learning (ICML)},
  year = {2024}
}